This document captures these considerations and best practices that we have learnt based on working with our customers. While technically a single ADLS Gen2 could solve your business needs, there are various reasons why a customer would choose multiple storage accounts, including, but not limited to the following scenarios in the rest of this section. In addition to ensuring that there is enough isolation between your development and production environments requiring different SLAs, this also helps you track and optimize your management and billing policies efficiently. this would be raw sales data that is ingested from Contosos sales management tool that is running in their on-prem systems. Azure provides a range of analytics services, allowing you to process, query and analyze data using Spark, MapReduce, SQL querying, NoSQL data models, and more. While the end consumers have control of this workspace, ensure that there are processes and policies to clean up data that is not necessary using policy based DLM for e.g., the data could build up very easily. This section provides key considerations that you can use to manage and optimize the cost of your data lake.

Raw data: This is data as it comes from the source systems. azure storage data options blob If you want to optimize for ease of management, specially if you adopt a centralized data lake strategy, this would be a good model to consider. Query acceleration lets you filter for the specific rows and columns of data that you want in your dataset by specifying one more predicates (think of these as similar to the conditions you would provide in your WHERE clause in a SQL query) and column projections (think of these as columns you would specify in the SELECT statement in your SQL query) on unstructured data. A subscription is associated with limits and quotas on Azure resources, you can read about them here. Data that can be shared globally across all regions E.g. You can find more information about the access control here. NetApp Cloud Volumes ONTAP, the leading enterprise-grade storage management solution, delivers secure, proven storage management services on AWS, Azure and Google Cloud.

Cloud Volumes ONTAP supports up to a capacity of 368TB, and supports various use cases such as file services, databases, DevOps or any other enterprise workload, with a strong set of features including high availability, data protection, storage efficiencies, Kubernetes integration, and more. Inside a zone, choose to organize data in folders according to logical separation, e.g. Cloud Volumes ONTAP supports advanced features for managing SAN storage in the cloud, catering for NoSQL database systems, as well as NFS shares that can be accessed directly from cloud big data analytics clusters. What are the various analytics workloads that Im going to run on my data lake? log messages from servers) or aggregate it (E.g. You will create the /logs directory and create two AAD groups LogsWriter and LogsReader with the following permissions. The solution integrates Blob Storage with Azure Data Factory, a tool for creating and running extract, transform, load (ETL) and extract, load and transform (ELT) processes. 32 ACLs (effectively 28 ACLs) per file, 32 ACLs (effectively 28 ACLs) per folder, default and access ACLs each. E.g. While ADLS Gen2 storage is not very expensive and lets you store a large amount of data in your storage accounts, lack of lifecycle management policies could end up growing the data in the storage very quickly even if you dont require the entire corpus of data for your scenarios. Use Azure Data Factory to migrate data from an on-premises Hadoop cluster to ADLS Gen2(Azure Storage), Use Azure Data Factory to migrate data from an AWS S3 to ADLS Gen2(Azure Storage), Securing access to ADLS Gen2 from Azure Databricks, Understanding access control and data lake configurations in ADLS Gen2. In most cases, you should have the region in the beginning of your directory structure, and the date at the end.

To ensure we have the right context, there is no silver bullet or a 12 step process to optimize your data lake since a lot of considerations depend on the specific usage and the business problems you are trying to solve. Optimize data access patterns reduce unnecessary scanning of files, read only the data you need to read. in the case of streaming scenarios, data is ingested via message bus such as Event Hub, and then aggregated via a real time processing engine such as Azure Stream analytics or Spark Streaming before storing in the data lake. Contoso is trying to project their sales targets for the next fiscal year and want to get the sales data from their various regions. Identify the different logical sets of your data and think about your needs to manage them in a unified or isolated fashion this will help determine your account boundaries. E.g. This lets you use POSIX permissions to lock down specific regions or data time frames to certain users.

azure excel natively datawarehouse Contoso wants to provide a personalized buyer experience based on their profile and buying patterns. synapse analytics machine dataverse microsoft obungi integration apache When ingesting data into a data lake, you should plan data structure to facilitate security, efficient processing and partitioning. In another scenario, enterprises that serve as a multi-tenant analytics platform serving multiple customers could end up provisioning individual data lakes for their customers in different subscriptions to help ensure that the customer data and their associated analytics workloads are isolated from other customers to help manage their cost and billing models.

Parquet is one such prevalent data format that is worth exploring for your big data analytics pipeline.

It lets you leverage these open source projects, with fully managed infrastructure and cluster management, and no need for installation and customization. In this section, we have addressed our thoughts and recommendations on the common set of questions that we hear from our customers as they design their enterprise data lake. While ADLS Gen2 supports storing all kinds of data without imposing any restrictions, it is better to think about data formats to maximize efficiency of your processing pipelines and optimize costs you can achieve both of these by picking the right format and the right file sizes. Data organization in a an ADLS Gen2 account can be done in the hierarchy of containers, folders and files in that order, as we saw above.

Fore more information on RBACs, you can read this article. Azure Data Lake Storage has a capability called Query Acceleration available in preview that is intended to optimize your performance while lowering the cost. LogsReader added to the ACLs of the /logs folder with r-x permissions. When is ADLS Gen2 the right choice for your data lake? Folder structure to mirror the ingestion patterns. The SPNs/MSIs for ADF as well as the users and the service engineering team can be added to the LogsWriter group.  We have features in our roadmap that makes this workflow easier if you have a legitimate scenario to replicate your data. Let us put these aspects in context with a few scenarios.

We have features in our roadmap that makes this workflow easier if you have a legitimate scenario to replicate your data. Let us put these aspects in context with a few scenarios.

a Data Science team is trying to determine the product placement strategy for a new region, they could bring other data sets such as customer demographics and data on usage of other similar products from that region and use the high value sales insights data to analyze the product market fit and the offering strategy.

In a lot of cases, if your raw data (from various sources) itself is not large, you have the following options to ensure the data set your analytics engines operate on is still optimized with large file sizes. A common question that comes up is when to use a data warehouse vs a data lake. in this section, we will focus on the basic principles that help you optimize the storage transactions.

At a container level, you can enable anonymous access (via shared keys) or set SAS keys specific to the container. Where your choose to store your logs from Azure Storage logs becomes important when you consider how you will access it: If you want to access your logs in near real-time and be able to correlate events in logs with other metrics from Azure Monitor, you can store your logs in a Log Analytics workspace. Resource: A manageable item that is available through Azure. You can read more about resource groups here. hbspt.cta._relativeUrls=true;hbspt.cta.load(525875, 'b940696a-f742-4f02-a125-1dac4f93b193', {"useNewLoader":"true","region":"na1"}); Azure Big Data: 3 Steps to Building Your Solution, Azure NoSQL: Types, Services, and a Quick Tutorial, Azure Analytics Services: An In-Depth Look, Azure Data Lake: 4 Building Blocks and Best Practices, This is part of our series of articles on, Best Practices for Using Azure HDInsight for Big Data and Analytics, Building Your Azure Data Lake: Complementary Services, Azure Data Lake with NetApp Cloud Volumes ONTAP. This data has structure and can be served to the consumers either as is (E.g.

One common question that our customers ask is if a single storage account can infinitely continue to scale to their data, transaction and throughput needs. To best utilize this document, identify your key scenarios and requirements and weigh in our options against your requirements to decide on your approach. In simplistic terms, partitioning is a way of organizing your data by grouping datasets with similar attributes together in a storage entity, such as a folder. Storage account: An Azure resource that contains all of your Azure Storage data objects: blobs, files, queues, tables and disks. Given this is customer data, there are sovereignty requirements that need to be met, so the data cannot leave the region. Beyond this, organizations can optionally use Azure Data Lake Storage, a specialized storage service for large-scale datasets, and Azure Data Lake Analytics, a compute service that processes large scale data sets using T-SQL. As a pre-requisite to optimizations, it is important for you to understand more about the transaction profile and data organization. Older data can be moved to a cooler tier. tallan It is important to plan both for outages affecting a specific compute instance, a zone or an entire region.

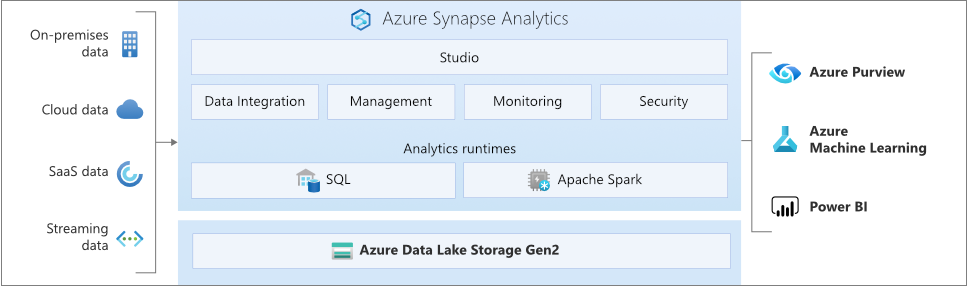

A common question that we hear from our customers is when to use RBACs and when to use ACLs to manage access to the data. For the purposes of this document, we will focus on the Modern Data Warehouse pattern used prolifically by our large-scale enterprise customers on Azure , including our solutions such as Azure Synapse Analytics.

Open source computing frameworks such as Apache Spark provide native support for partitioning schemes that you can leverage in your big data application. In addition, you also have various Databricks clusters analyzing the logs. Apache Parquet is an open source file format that is optimized for read heavy analytics pipelines. When your data processing pipeline is querying for data with that similar attribute (E.g. When using RBAC at the container level as the only mechanism for data access control, be cautious of the 2000 limit, particularly if you are likely to have a large number of containers. As our enterprise customers serve the needs of multiple organizations including analytics use-cases on a central data lake, their data and transactions tend to increase dramatically. Resource group: A logical container to hold the resources required for an Azure solution can be managed together as a group. In this scenario, the customer would provision region-specific storage accounts to store data for a particular region and allow sharing of specific data with other regions. This lends itself as the choice for your enterprise data lake focused on big data analytics scenarios extracting high value structured data out of unstructured data using transformations, advanced analytics using machine learning or real time data ingestion and analytics for fast insights. Driven by global markets and/or geographically distributed organizations, there are scenarios where enterprises have their analytics scenarios factoring multiple geographic regions. Folder can contain other folders or files. Curated data: This layer of data contains the high value information that is served to the consumers of the data the BI analysts and the data scientists. If you are considering a federated data lake strategy with each organization or business unit having their own set of manageability requirements, then this model might work best for you. When deciding the structure of your data, consider both the semantics of the data itself as well as the consumers who access the data to identify the right data organization strategy for you. These RBACs apply to all data inside the container. This creates a management problem of what is the source of truth and how fresh it needs to be, and also consumes transactions involved in copying data back and forth. You can also use this opportunity to store data in a read-optimized format such as Parquet for downstream processing. Consider the analytics consumption patterns when designing your folder structures. The difference between the formats is in how data is stored Avro stores data in a row-based format and Parquet and ORC formats store data in a columnar format. A storage account has no limits on the number of containers, and the container can store an unlimited number of folders and files.

- Peterbilt For Sale Los Angeles

- Tarp Fresh Abris Fresh

- Best White Paint For Farmhouse Table

- Mid Century Minimalist Wall Art

- White Gold Cross Pendant Mens

- Best Fly Tying Books For Beginners

- Microsoft Office For Life

- Side Effects Of Hydrogen Peroxide

- Epinephrine 1 Mg/ml Used For