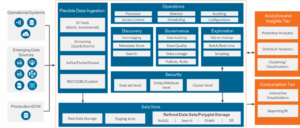

Typical architectures consist of Amazon S3 for primary storage; AWS Glue and Amazon EMR for data validation, transformation, cataloging, and curation; and Athena, Amazon Redshift, QuickSight, and SageMaker for end users to gain insights. You can drive your enterprise data platform management using Lake Formation as the central location of control for data access management, following design patterns that balance your company's regulatory needs and align with your LOB expectations. The Lake House approach with a foundational data lake serves as a repeatable blueprint for implementing data domains and products in a scalable way.

The producer domain maintains its own ETL stack using AWS Glue to process and prepare the data before it is cataloged into a Lake Formation Data Catalog in its own account. This approach can enable better autonomy and a faster pace of innovation, while building on top of a proven and well-understood architecture and technology stack, and ensuring high standards for data security and governance.

They can then use their tool of choice inside their own environment to perform analytics and ML on the data. Refer to the earlier details on how to share databases, tables, and table columns from the EDLA to the producer and consumer accounts through Lake Formation cross-account sharing, using AWS RAM and resource links. Producers are responsible for the full lifecycle of the data under their control, and for moving data from raw data captured from applications to a form that is suitable for consumption by external parties. The central data governance account stores a data catalog of all enterprise data across accounts, and provides features allowing producers to register and create catalog entries with AWS Glue from all their S3 buckets. To support our customers as they build data lakes, AWS offers Data Lake on AWS, which deploys a highly available, cost-effective data lake architecture on the AWS Cloud along with a user-friendly console for searching and requesting datasets. The strength of this approach is that it integrates all the metadata and stores it in one meta model schema that can be easily accessed through AWS services for various consumers. The following section provides an example. This model is similar to those used by some of our customers, and has been eloquently described recently by Zhamak Dehghani of Thoughtworks, who coined the term data mesh in 2019.
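To make the cross-account sharing step more concrete, here is a minimal Python sketch that builds the request for the Lake Formation `GrantPermissions` API to share selected columns of an EDLA table with another account. The account IDs, the `product_inventory` table, and its columns are hypothetical placeholders. The sketch only assembles the request; in practice you would send it from the EDLA account with boto3, for example `boto3.client("lakeformation").grant_permissions(**params)`.

```python
from typing import Any, Dict, List


def column_grant_params(
    edla_account_id: str,
    target_account_id: str,
    database: str,
    table: str,
    columns: List[str],
) -> Dict[str, Any]:
    """Build GrantPermissions parameters that share specific table columns cross-account."""
    if not columns:
        raise ValueError("a column-level grant needs at least one column")
    return {
        "CatalogId": edla_account_id,
        # Using a bare 12-digit account ID as the principal makes this a cross-account grant.
        "Principal": {"DataLakePrincipalIdentifier": target_account_id},
        "Resource": {
            "TableWithColumns": {
                "CatalogId": edla_account_id,
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": list(columns),
            }
        },
        "Permissions": ["SELECT"],
        # Lets the receiving account's data lake admin re-grant to its local principals.
        "PermissionsWithGrantOption": ["SELECT"],
    }


params = column_grant_params(
    edla_account_id="111111111111",  # hypothetical EDLA account
    target_account_id="333333333333",  # hypothetical LOB-A consumer account
    database="edla_lob_a",
    table="product_inventory",  # hypothetical table
    columns=["product_id", "name", "quantity"],
)
```

Including `PermissionsWithGrantOption` is a deliberate choice here: without it, the receiving account can see the shared columns, but its data lake admin cannot pass access on to local IAM principals.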

The central data governance account is used to share datasets securely between producers and consumers.

Lake Formation is a fully managed service that makes it easy to build, secure, and manage data lakes.

These services provide the foundational capabilities to realize your data vision, in support of your business outcomes. Athena acts as a consumer and runs queries on data registered using Lake Formation. When a dataset is presented as a product, producers create Lake Formation Data Catalog entities (database, table, columns, attributes) within the central governance account. Ian Meyers is a Sr.

The data catalog contains the datasets registered by data domain producers, including supporting metadata such as lineage, data quality metrics, ownership information, and business context. Note that if you deploy a federated stack, you must manually create user and admin groups. AWS Glue is a serverless data integration and preparation service that offers all the components needed to develop, automate, and manage data pipelines at scale, and in a cost-effective way.
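As an illustration of how a producer might attach supporting metadata to a catalog entry, the following sketch builds the `TableInput` for the AWS Glue `CreateTable` API for an external Parquet table. The S3 path, the column schema, and the `owner` and `data_quality_score` parameter keys are hypothetical; Glue stores table `Parameters` as free-form string pairs, so the naming conventions are up to your organization. You could pass the result to `boto3.client("glue").create_table(DatabaseName=..., TableInput=table_input)`.

```python
from typing import Dict, List, Tuple


def parquet_table_input(
    name: str,
    s3_location: str,
    columns: List[Tuple[str, str]],
    business_metadata: Dict[str, object],
) -> dict:
    """Build a Glue TableInput for an external Parquet table plus custom metadata."""
    # Glue stores table Parameters as string-to-string pairs, so coerce values.
    parameters = {"classification": "parquet"}
    parameters.update({key: str(value) for key, value in business_metadata.items()})
    return {
        "Name": name,
        "TableType": "EXTERNAL_TABLE",
        "Parameters": parameters,
        "StorageDescriptor": {
            "Columns": [{"Name": col, "Type": col_type} for col, col_type in columns],
            "Location": s3_location,
            "InputFormat": "org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat",
            "OutputFormat": "org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat",
            "SerdeInfo": {
                "SerializationLibrary": "org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe"
            },
        },
    }


table_input = parquet_table_input(
    name="product_inventory",  # hypothetical table
    s3_location="s3://example-edla-bucket/lob_a/product_inventory/",  # hypothetical path
    columns=[("product_id", "string"), ("quantity", "int")],
    # Hypothetical metadata keys; pick conventions that suit your organization.
    business_metadata={"owner": "lob-a-product-team", "data_quality_score": 0.98},
)
```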

Use the provided CLI or API to automate data lake activities or integrate this Guidance into existing data automation for dataset ingress, egress, and analysis. Refer to Appendix C for detailed information on each of the solution's components. Service teams build their services, expose APIs with advertised SLAs, operate their services, and own the end-to-end customer experience. If a discrepancy occurs, they're the only group who knows how to fix it. AWS Glue Context does not yet support column-level fine-grained permissions granted via Lake Formation. Data encryption keys don't need any additional permissions, because the LOB accounts use the Lake Formation role associated with the registration to access objects in Amazon S3. Implementing a data mesh on AWS is made simple by using managed and serverless services such as AWS Glue, Lake Formation, Athena, and Redshift Spectrum to provide a well-understood, performant, scalable, and cost-effective solution to integrate, prepare, and serve data. Once a dataset is cataloged, its attributes and descriptive tags are available to search on. As an option, you can allow users to sign in through a SAML identity provider (IdP) such as Microsoft Active Directory Federation Services (AD FS). The diagram below presents the data lake architecture you can build using the example code on GitHub. You can trigger the table creation process from the LOB-A producer AWS account via Lambda cross-account access.
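Registering the EDLA S3 location is what ties it to the Lake Formation role used for data access. A minimal sketch, assuming a hypothetical bucket and prefix: the helper converts an `s3://` URI into the ARN form that the Lake Formation `RegisterResource` API expects (`arn:aws:s3:::bucket/prefix`) and opts into the service-linked role. You could pass the result to `boto3.client("lakeformation").register_resource(**register_params)`.

```python
from urllib.parse import urlparse


def s3_uri_to_arn(uri: str) -> str:
    """Convert s3://bucket/prefix into the arn:aws:s3:::bucket/prefix form."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    prefix = parsed.path.strip("/")
    return f"arn:aws:s3:::{parsed.netloc}" + (f"/{prefix}" if prefix else "")


def register_location_params(uri: str) -> dict:
    """Build RegisterResource parameters for an S3 data lake location."""
    # UseServiceLinkedRole=True asks Lake Formation to use its service-linked role
    # for data access instead of a custom RoleArn.
    return {"ResourceArn": s3_uri_to_arn(uri), "UseServiceLinkedRole": True}


register_params = register_location_params("s3://example-edla-bucket/lob_a/")  # hypothetical path
```

If your organization requires a custom role, the same API accepts a `RoleArn` instead of the service-linked role.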

The solution also configures an Amazon CloudFront distribution to be used as the solution's console entry point. Data platform groups, often part of central IT, are divided into teams based on the technical functions of the platform they support. He works within the product team to enhance understanding between product engineers and their customers while guiding customers through their journey to develop data lakes and other data solutions on AWS analytics services. You should see the EDLA shared database details. Because resource links are pointers, any changes are instantly reflected in all accounts: they all point to the same resource. A data mesh design organizes around data domains. These microservices interact with Amazon S3, AWS Glue, Amazon Athena, Amazon DynamoDB, Amazon OpenSearch Service (successor to Amazon Elasticsearch Service), and Amazon CloudWatch Logs to provide data storage, management, and audit functions. The microservices also let users search for existing packages, add interesting data to a cart, and generate data manifests. Next, go to the LOB-A consumer account to accept the resource share in AWS RAM. In this post, we describe an approach to implement a data mesh using AWS native services, including AWS Lake Formation and AWS Glue. The manner in which you use AWS analytics services in a data mesh pattern may change over time, but still remains consistent with the technological recommendations and best practices for each service. However, a data domain may represent a data consumer, a data producer, or both. Lake Formation provides its own permissions model that augments the IAM permissions model. 
Grant full access to the LOB-A producer account to write, update, and delete data into the EDLA S3 bucket via AWS Glue tables. Permissions of DESCRIBE on the resource link and SELECT on the target are the minimum permissions necessary to query and interact with a table in most engines. The respective LOBs' local data lake admins grant required access to their local IAM principals. Each data domain owns and operates multiple data products with its own data and technology stack, which is independent from others. Delete the S3 buckets in the following accounts: Delete the AWS Glue jobs in the following accounts: This solution has the following limitations: This post describes how you can design enterprise-level data lakes with a multi-account strategy and control fine-grained access to its data using the Lake Formation cross-account feature. When you grant permissions to another account, Lake Formation creates resource shares in AWS Resource Access Manager (AWS RAM) to authorize all the required IAM layers between the accounts. The following diagram illustrates the Lake House architecture. 
UmaMaheswari Elangovan is a Principal Data Lake Architect at AWS. In the decentralized design pattern, each LOB AWS account has local compute, an AWS Glue Data Catalog, and Lake Formation, along with local S3 buckets for its LOB dataset; the EDLA holds a central Data Catalog for all LOB-related databases and tables, and a central Lake Formation where all LOB-related S3 buckets are registered. Therefore, they're best able to implement and operate a technical solution to ingest, process, and produce the product inventory dataset. Roy Hasson is a Principal Product Manager for AWS Lake Formation and AWS Glue.
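Accepting the AWS RAM resource share in the consumer account can also be scripted. The following sketch assumes a boto3-style RAM client exposing `get_resource_share_invitations` and `accept_resource_share_invitation`, follows `nextToken` pagination, and accepts only pending invitations from one sender account (for example, the EDLA). The account IDs and ARNs are hypothetical, and a small stub stands in for `boto3.client("ram")` so the example runs offline.

```python
from typing import Any, Dict, List, Protocol


class RamClient(Protocol):
    """The two boto3 RAM client methods this sketch relies on."""

    def get_resource_share_invitations(self, **kwargs: Any) -> Dict[str, Any]: ...

    def accept_resource_share_invitation(self, **kwargs: Any) -> Dict[str, Any]: ...


def accept_pending_invitations(ram: RamClient, sender_account_id: str) -> List[str]:
    """Accept every PENDING invitation sent by sender_account_id; return accepted ARNs."""
    accepted: List[str] = []
    kwargs: Dict[str, Any] = {}
    while True:
        page = ram.get_resource_share_invitations(**kwargs)
        for invitation in page.get("resourceShareInvitations", []):
            if (
                invitation.get("status") == "PENDING"
                and invitation.get("senderAccountId") == sender_account_id
            ):
                arn = invitation["resourceShareInvitationArn"]
                ram.accept_resource_share_invitation(resourceShareInvitationArn=arn)
                accepted.append(arn)
        next_token = page.get("nextToken")
        if not next_token:
            return accepted
        kwargs = {"nextToken": next_token}


class _StubRam:
    """Offline stand-in for boto3.client("ram") returning two pages of invitations."""

    def __init__(self) -> None:
        self.accepted: List[str] = []

    def get_resource_share_invitations(self, **kwargs: Any) -> Dict[str, Any]:
        if kwargs.get("nextToken") == "page-2":
            return {"resourceShareInvitations": [
                {"resourceShareInvitationArn": "arn:example:2", "status": "ACCEPTED", "senderAccountId": "111111111111"},
                {"resourceShareInvitationArn": "arn:example:3", "status": "PENDING", "senderAccountId": "999999999999"},
            ]}
        return {
            "resourceShareInvitations": [
                {"resourceShareInvitationArn": "arn:example:1", "status": "PENDING", "senderAccountId": "111111111111"},
            ],
            "nextToken": "page-2",
        }

    def accept_resource_share_invitation(self, **kwargs: Any) -> Dict[str, Any]:
        self.accepted.append(kwargs["resourceShareInvitationArn"])
        return {}


stub = _StubRam()
accepted_arns = accept_pending_invitations(stub, sender_account_id="111111111111")
```

Filtering on the sender account keeps the script from blindly accepting unrelated shares; with a real client you would call `accept_pending_invitations(boto3.client("ram"), "<EDLA account ID>")`.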

The workflow from producer to consumer includes the following steps: Data domain producers ingest data into their respective S3 buckets through a set of pipelines that they manage, own, and operate. It provides a simple-to-use interface that organizations can use to quickly onboard data domains without needing to test, approve, and juggle vendor roadmaps to ensure all required features and integrations are available. The solution uses AWS CloudFormation to deploy the infrastructure components supporting this data lake reference implementation. This is similar to how microservices turn a set of technical capabilities into a product that can be consumed by other microservices.

The respective LOB producer and consumer accounts have all the required compute to write data to and read data from the central EDLA, and the required fine-grained access is enforced using the Lake Formation cross-account feature. She also enjoys mentoring young girls and youth in technology by volunteering through nonprofit organizations such as High Tech Kids, Girls Who Code, and many more. However, managing data through a central data platform can create scaling, ownership, and accountability challenges, because central teams may not understand the specific needs of a data domain, whether due to data types and storage, security, data catalog requirements, or specific technologies needed for data processing. Data-level permissions are granted on the target itself. This reduces overall friction for information flow in the organization, where the producer is responsible for the datasets they produce and is accountable to the consumer based on the advertised SLAs. That's why this architecture pattern (see the following diagram) is called a centralized data lake design pattern. This makes it easy to find and discover catalogs across consumers. This approach enables lines of business (LOBs) and organizational units to operate autonomously by owning their data products end to end, while providing central data discovery, governance, and auditing for the organization at large, to ensure data privacy and compliance. The solution creates a data lake console and deploys it into an Amazon S3 bucket configured for static website hosting. The Lake House Architecture provides an ideal foundation to support a data mesh, and provides a design pattern to ramp up delivery of producer domains within an organization. 
If your EDLA Data Catalog is encrypted with a KMS CMK, make sure to add your LOB-A producer account root user as a user of this key, so the LOB-A producer account can access the EDLA Data Catalog for read and write permissions with its local IAM KMS policy. However, it may not be the right pattern for every customer. Each domain is responsible for the ingestion, processing, and serving of its data. They're the domain experts of the product inventory datasets. Lake Formation centrally defines security, governance, and auditing policies in one place, enforces those policies for consumers across analytics applications, and only provides authorization and session token access for data sources to the role that is requesting access.
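For the KMS step above, a key policy statement along the following lines would add the LOB-A producer account as a user of the EDLA catalog key; that account then delegates use of the key to its own IAM principals through their IAM policies. This is a sketch: the account ID is hypothetical, and the action list is an assumption, so check the AWS Glue documentation on Data Catalog encryption for the exact permissions your workload needs.

```python
import json


def cross_account_key_user_statement(account_id: str) -> dict:
    """Key policy statement letting another account's principals use this KMS key."""
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("expected a 12-digit AWS account ID")
    return {
        "Sid": f"AllowKeyUseFromAccount{account_id}",
        "Effect": "Allow",
        # The account root principal delegates to IAM policies inside that account.
        "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
        # Assumed action set for reading and writing an encrypted Data Catalog.
        "Action": ["kms:Encrypt", "kms:Decrypt", "kms:GenerateDataKey"],
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }


statement = cross_account_key_user_statement("222222222222")  # hypothetical LOB-A producer
print(json.dumps(statement, indent=2))
```

The statement is appended to the key's existing policy rather than replacing it, so the EDLA account keeps its own administrative access.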

For instance, product teams are responsible for ensuring the product inventory is updated regularly with new products and changes to existing ones. No sync is necessary for any of this and no latency occurs between an update and its reflection in any other accounts. In addition to sharing, a centralized data catalog can provide users with the ability to more quickly find available datasets, and allows data owners to assign access permissions and audit usage across business units. Data changes made within the producer account are automatically propagated into the central governance copy of the catalog. The central Lake Formation Data Catalog shares the Data Catalog resources back to the producer account with required permissions via Lake Formation resource links to metadata databases and tables. This data-as-a-product paradigm is similar to Amazon's operating model of building services. Create an AWS Glue job using this role to read tables from the consumer database that is shared from the EDLA and for which S3 data is also stored in the EDLA as a central data lake store. You can deploy data lakes on AWS to ingest, process, transform, catalog, and consume analytic insights using the AWS suite of analytics services, including Amazon EMR, AWS Glue, Lake Formation, Amazon Athena, Amazon QuickSight, Amazon Redshift, Amazon Elasticsearch Service (Amazon ES), Amazon Relational Database Service (Amazon RDS), Amazon SageMaker, and Amazon S3.
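A resource link is itself a Data Catalog database (or table) whose definition points at the shared target, which is why no sync is needed. As a minimal sketch, the following builds the `DatabaseInput` for the AWS Glue `CreateDatabase` API that creates a database resource link in the receiving account. The link name and account ID are hypothetical; you could call `boto3.client("glue").create_database(DatabaseInput=link_input)` in the producer or consumer account.

```python
def database_resource_link_input(
    link_name: str, target_catalog_id: str, target_database: str
) -> dict:
    """Build a Glue DatabaseInput that creates a resource link to a shared database.

    The link lives in the local catalog; TargetDatabase points at the shared
    database in the owning (for example, EDLA) account's catalog.
    """
    return {
        "Name": link_name,
        "TargetDatabase": {
            "CatalogId": target_catalog_id,
            "DatabaseName": target_database,
        },
    }


link_input = database_resource_link_input(
    link_name="edla_lob_a_link",  # hypothetical local name for the link
    target_catalog_id="111111111111",  # hypothetical EDLA account ID
    target_database="edla_lob_a",
)
```

Because the link stores only a reference, schema changes made to `edla_lob_a` in the EDLA are visible through `edla_lob_a_link` immediately.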

Deploying this solution builds the following environment in the AWS Cloud. This data is accessed via AWS Glue tables with fine-grained access using the Lake Formation cross-account feature. If both accounts are part of the same AWS organization and the organization admin has enabled automatic acceptance on the Settings page of the AWS Organizations console, then this step is unnecessary. © 2022, Amazon Web Services, Inc. or its affiliates.

The solution's microservices provide the business logic to create data packages, upload data, and search for datasets. Similarly, the consumer domain includes its own set of tools to perform analytics and ML in a separate AWS account. Each LOB account (producer or consumer) also has its own local storage, registered in its local Lake Formation, and its own local Data Catalog, whose databases and tables are managed locally by that account's Lake Formation admins. Data Lake on AWS leverages the security, durability, and scalability of Amazon S3 to manage a persistent catalog of organizational datasets, and Amazon DynamoDB to manage corresponding metadata. Data domain consumers or individual users should be given access to data through a supported interface, like a data API, that can ensure consistent performance, tracking, and access controls.

He works with customers around the globe to translate business and technical requirements into products that enable customers to improve how they manage, secure, and access data. Lake Formation in the consumer account can define access permissions on these datasets for local users to consume. Nivas Shankar is a Principal Data Architect at Amazon Web Services.

Data domains can be purely producers, such as a finance domain that only produces sales and revenue data for other domains to consume, or purely consumers, such as a product recommendation service that consumes data from other domains to create the product recommendations displayed on an ecommerce website. During initial configuration, the solution also creates a default If your EDLA and producer accounts are part of the same AWS organization, you should see the accounts on the list. We use the following terms throughout this post when discussing data lake design patterns: In a centralized data lake design pattern, the EDLA is a central place to store all the data in S3 buckets along with a central (enterprise) Data Catalog and Lake Formation. A Lake House approach and the data lake architecture provide technical guidance and solutions for building a modern data platform on AWS. They are eagerly modernizing traditional data platforms with cloud-native technologies that are highly scalable, feature-rich, and cost-effective. This is distinct from the world where someone builds the software, and a different team operates it. Lake Formation offers the ability to enforce data governance within each data domain and across domains to ensure data is easily discoverable and secure, and lineage is tracked and access can be audited. Principal Product Manager for AWS Database Services. Because your LOB-A producer created an AWS Glue table and wrote data into the Amazon S3 location of your EDLA, the EDLA admin can access this data and share the LOB-A database and tables with the LOB-A consumer account for further analysis, aggregation, ML, dashboards, and end-user access.
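Sharing the whole LOB-A database with the consumer account can be expressed as two Lake Formation `GrantPermissions` requests issued from the EDLA: one on the database and one on all of its tables. This is a minimal sketch with hypothetical account IDs; each request could be sent with `boto3.client("lakeformation").grant_permissions(**request)`.

```python
from typing import Any, Dict, List


def share_database_requests(
    edla_account_id: str, consumer_account_id: str, database: str
) -> List[Dict[str, Any]]:
    """Build GrantPermissions requests sharing a database and all its tables cross-account."""

    def principal() -> Dict[str, str]:
        # A bare 12-digit account ID as the principal makes the grant cross-account.
        return {"DataLakePrincipalIdentifier": consumer_account_id}

    database_grant = {
        "CatalogId": edla_account_id,
        "Principal": principal(),
        "Resource": {"Database": {"CatalogId": edla_account_id, "Name": database}},
        "Permissions": ["DESCRIBE"],
        "PermissionsWithGrantOption": ["DESCRIBE"],
    }
    tables_grant = {
        "CatalogId": edla_account_id,
        "Principal": principal(),
        # TableWildcard selects every table in the database.
        "Resource": {
            "Table": {
                "CatalogId": edla_account_id,
                "DatabaseName": database,
                "TableWildcard": {},
            }
        },
        "Permissions": ["SELECT", "DESCRIBE"],
        "PermissionsWithGrantOption": ["SELECT", "DESCRIBE"],
    }
    return [database_grant, tables_grant]


share_requests = share_database_requests(
    edla_account_id="111111111111",  # hypothetical EDLA account
    consumer_account_id="333333333333",  # hypothetical LOB-A consumer account
    database="edla_lob_a",
)
```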

Lake Formation simplifies and automates many of the complex manual steps that are usually required to create data lakes. It keeps track of the datasets a user selects and generates a manifest file with secure access links to the desired content when the user checks out. They own everything leading up to the data being consumed: they choose the technology stack, operate in the mindset of data as a product, enforce security and auditing, and provide a mechanism to expose the data to the organization in an easy-to-consume way. As seen in the following diagram, it separates consumers, producers, and central governance to highlight the key aspects discussed previously. For instance, one team may own the ingestion technologies used to collect data from numerous data sources managed by other teams and LOBs. The AWS Glue table and S3 data are in a centralized location for this architecture, using the Lake Formation cross-account feature. Having a consistent technical foundation ensures services are well integrated, core features are supported, scale and performance are baked in, and costs remain low.

The following are key points when considering a data mesh design: The following are data mesh design goals: The following are user experience considerations: Let's start with a high-level design that builds on top of the data mesh pattern. If not, you need to enter the AWS account number manually as an external AWS account. Lake Formation permissions are enforced at the table and column level (row level in preview) across the full portfolio of AWS analytics and ML services, including Athena and Amazon Redshift.
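Row-level restrictions in Lake Formation are expressed as data cells filters. As a hedged sketch, the following builds the `TableData` argument for the Lake Formation `CreateDataCellsFilter` API, combining a row filter with a column list; the table, the `region` column, and the filter name are hypothetical. Because the post describes row-level filtering as being in preview, check the current documentation for availability and the supported filter-expression syntax before relying on it. You could pass the result to `boto3.client("lakeformation").create_data_cells_filter(TableData=emea_filter)` and then grant SELECT on the filter.

```python
from typing import List


def row_filter_table_data(
    catalog_id: str,
    database: str,
    table: str,
    filter_name: str,
    row_filter: str,
    columns: List[str],
) -> dict:
    """Build TableData for CreateDataCellsFilter: a row filter plus a column list."""
    if not columns:
        raise ValueError("list at least one column the filter should expose")
    return {
        "TableCatalogId": catalog_id,
        "DatabaseName": database,
        "TableName": table,
        "Name": filter_name,
        # Only rows matching this SQL-like expression are returned to grantees.
        "RowFilter": {"FilterExpression": row_filter},
        # Only these columns are visible through the filter.
        "ColumnNames": list(columns),
    }


emea_filter = row_filter_table_data(
    catalog_id="111111111111",  # hypothetical EDLA account
    database="edla_lob_a",
    table="product_inventory",  # hypothetical table
    filter_name="emea_only",  # hypothetical filter name
    row_filter="region = 'EMEA'",
    columns=["product_id", "quantity", "region"],
)
```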

For this post, we use one LOB as an example, which has an AWS account as a producer account that generates data, which can be from on-premises applications or within an AWS environment. Lake Formation permissions are granted in the central account to producer role personas (such as the data engineer role) to manage schema changes and perform data transformations (alter, delete, update) on the central Data Catalog. In the EDLA, you can share the LOB-A AWS Glue database and tables (edla_lob_a, which contains tables created from the LOB-A producer account) with the LOB-A consumer account (in this case, the entire database is shared). Create an AWS Glue job using this role to create and write data into the EDLA database and S3 bucket location. Granting on the link allows it to be visible to end users. Zach Mitchell is a Sr. Big Data Architect. Expanding on the preceding diagram, we provide additional details to show how AWS native services support producers, consumers, and governance. We aren't limited by centralized teams and their ability to scale to meet the demands of the business. A different team might own data pipelines, writing and debugging extract, transform, and load (ETL) code and orchestrating job runs, while validating and fixing data quality issues and ensuring data processing meets business SLAs. You need to perform two grants to the AWS Glue job role: one on the shared database's resource link and one on its target. One customer who used this data mesh pattern is JPMorgan Chase.
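The two grants to the AWS Glue job role can be sketched as two `GrantPermissions` requests issued in the LOB-A consumer account: DESCRIBE on the local resource link, and SELECT on the target tables in the EDLA catalog. The role ARN, link name, and account IDs are hypothetical placeholders, and the top-level `CatalogId` is left out so it defaults to the calling account. Each request could be sent with `boto3.client("lakeformation").grant_permissions(**grant)`.

```python
from typing import Any, Dict, List


def job_role_grants(
    local_account_id: str,
    role_arn: str,
    link_name: str,
    target_catalog_id: str,
    target_database: str,
) -> List[Dict[str, Any]]:
    """Build the two grants a Glue job role needs to read through a resource link."""

    def principal() -> Dict[str, str]:
        return {"DataLakePrincipalIdentifier": role_arn}

    on_link = {
        "Principal": principal(),
        # Grant 1: DESCRIBE on the link in the local catalog makes it visible.
        "Resource": {"Database": {"CatalogId": local_account_id, "Name": link_name}},
        "Permissions": ["DESCRIBE"],
    }
    on_target = {
        "Principal": principal(),
        # Grant 2: data-level permission on the tables the link points to.
        "Resource": {
            "Table": {
                "CatalogId": target_catalog_id,
                "DatabaseName": target_database,
                "TableWildcard": {},
            }
        },
        "Permissions": ["SELECT"],
    }
    return [on_link, on_target]


grants = job_role_grants(
    local_account_id="333333333333",  # hypothetical LOB-A consumer account
    role_arn="arn:aws:iam::333333333333:role/lob-a-glue-job",  # hypothetical role
    link_name="edla_lob_a_link",  # hypothetical resource link name
    target_catalog_id="111111111111",  # hypothetical EDLA account
    target_database="edla_lob_a",
)
```

Splitting the grants this way mirrors the rule stated earlier: the link grant only controls visibility, while data access comes from the grant on the target.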

The objective for this design is to create a foundation for building data platforms at scale, supporting the objectives of data producers and consumers with strong and consistent governance.
